Synaptic

Important: Synaptic 2.x is currently under discussion. Feel free to participate.

Synaptic is a JavaScript neural network library for node.js and the browser. Its generalized algorithm is architecture-free, so you can build and train basically any type of first-order or even second-order neural network architecture.

This library includes a few built-in architectures like multilayer perceptrons, multilayer long short-term memory networks (LSTM), liquid state machines or Hopfield networks, and a trainer capable of training any given network. The trainer includes built-in training tasks and tests like solving an XOR, completing a Distracted Sequence Recall task or an Embedded Reber Grammar test, so you can easily test and compare the performance of different architectures.

The algorithm implemented by this library has been taken from Derek D. Monner's paper:

A generalized LSTM-like training algorithm for second-order recurrent neural networks

References to the equations in that paper appear in comments throughout the source code.

Introduction

If you have no prior knowledge about neural networks, you should start by reading this guide.

If you want a practical example of how to feed data to a neural network, then take a look at this article.

You may also want to take a look at this article.

Demos

- Solve an XOR

- Discrete Sequence Recall Task

- Learn Image Filters

- Paint an Image

- Self Organizing Map

- Read from Wikipedia

- Creating a Simple Neural Network (Video)

The source code of these demos can be found in this branch.

Getting started

To try out the examples, check out the gh-pages branch.

git checkout gh-pages

Other languages

This README is also available in other languages.

- Chinese Simplified | 中文文件, thanks to @noraincode.

- Chinese Traditional | 繁體中文, thanks to @NoobTW.

- Japanese | 日本語, thanks to @oshirogo.

Overview

Installation

In node

You can install synaptic with npm:

npm install synaptic --save

In the browser

You can install synaptic with bower:

bower install synaptic

Or you can simply use the CDN link, kindly provided by CDNjs:

<script src="https://cdnjs.cloudflare.com/ajax/libs/synaptic/1.1.4/synaptic.js"></script>

Usage

var synaptic = require('synaptic'); // this line is not needed in the browser
var Neuron = synaptic.Neuron,
  Layer = synaptic.Layer,
  Network = synaptic.Network,
  Trainer = synaptic.Trainer,
  Architect = synaptic.Architect;

Now you can start to create networks, train them, or use built-in networks from the Architect.
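Training a network comes down to repeatedly calling `activate` with an input and `propagate` with a learning rate and the target output. The following is a minimal sketch of such a loop, not the library's own trainer. The helper name `trainManually` and the `{ input, output }` sample shape are assumptions made for this illustration, and the function only relies on the network exposing `activate` and `propagate`.

```javascript
// Hypothetical helper (not part of synaptic): trains `network` on `set` for a
// fixed number of passes and returns the mean squared error of the last pass.
// `set` is assumed to be an array of { input: [...], output: [...] } samples.
function trainManually(network, set, learningRate, passes) {
  var lastError = 0;
  for (var pass = 0; pass < passes; pass++) {
    var sum = 0;
    var count = 0;
    for (var s = 0; s < set.length; s++) {
      var sample = set[s];
      // forward pass: returns the activations of the output layer
      var output = network.activate(sample.input);
      // backward pass: adjust the weights towards the target values
      network.propagate(learningRate, sample.output);
      // accumulate the squared error of this sample
      for (var i = 0; i < sample.output.length; i++) {
        var diff = sample.output[i] - output[i];
        sum += diff * diff;
        count++;
      }
    }
    lastError = count > 0 ? sum / count : 0;
  }
  return lastError;
}
```

Any Network instance can be passed in, for example one of the networks built in the Examples section. The Trainer covers the same ground with more options, so a hand-written loop like this is mostly useful when you need full control over each step.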

Examples

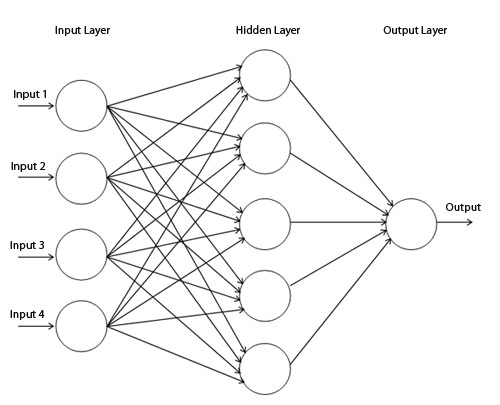

Perceptron

This is how you can create a simple perceptron:

function Perceptron(input, hidden, output)
{
  // create the layers
  var inputLayer = new Layer(input);
  var hiddenLayer = new Layer(hidden);
  var outputLayer = new Layer(output);

  // connect the layers
  inputLayer.project(hiddenLayer);
  hiddenLayer.project(outputLayer);

  // set the layers
  this.set({
    input: inputLayer,
    hidden: [hiddenLayer],
    output: outputLayer
  });
}

// extend the prototype chain
Perceptron.prototype = new Network();
Perceptron.prototype.constructor = Perceptron;

Now you can test your new network by creating a trainer and teaching the perceptron to learn an XOR:

var myPerceptron = new Perceptron(2,3,1);
var myTrainer = new Trainer(myPerceptron);

myTrainer.XOR(); // { error: 0.004998819355993572, iterations: 21871, time: 356 }

myPerceptron.activate([0,0]); // 0.0268581547421616
myPerceptron.activate([1,0]); // 0.9829673642853368
myPerceptron.activate([0,1]); // 0.9831714267395621
myPerceptron.activate([1,1]); // 0.02128894618097928
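The outputs above are close to 0 or 1 rather than exact, so checking a trained network usually means rounding them. As a rough sketch, the helper below compares rounded outputs against the XOR truth table. The names `xorTable` and `xorAccuracy` are made up for this example and are not part of synaptic. The helper takes a plain function instead of a network, and accepts either a number or an array as its result.

```javascript
// XOR truth table: each row pairs an input vector with the expected bit.
var xorTable = [
  { input: [0, 0], expected: 0 },
  { input: [0, 1], expected: 1 },
  { input: [1, 0], expected: 1 },
  { input: [1, 1], expected: 0 }
];

// Hypothetical helper (not part of synaptic): returns the fraction of rows
// for which the rounded output of `activate` matches the expected bit.
function xorAccuracy(activate) {
  var correct = 0;
  for (var i = 0; i < xorTable.length; i++) {
    var result = activate(xorTable[i].input);
    // accept either a single number or an array holding one output value
    var value = Array.isArray(result) ? result[0] : result;
    if (Math.round(value) === xorTable[i].expected) {
      correct++;
    }
  }
  return correct / xorTable.length;
}
```

With the perceptron trained above, this could be called as `xorAccuracy(function (input) { return myPerceptron.activate(input); })`.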

Long Short-Term Memory

This is how you can create a simple long short-term memory network with input gate, forget gate, output gate, and peephole connections:

function LSTM(input, blocks, output)
{
  // create the layers
  var inputLayer = new Layer(input);
  var inputGate = new Layer(blocks);
  var forgetGate = new Layer(blocks);
  var memoryCell = new Layer(blocks);
  var outputGate = new Layer(blocks);
  var outputLayer = new Layer(output);

  // connections from input layer
  var input = inputLayer.project(memoryCell);
  inputLayer.project(inputGate);
  inputLayer.project(forgetGate);
  inputLayer.project(outputGate);

  // connections from memory cell
  var output = memoryCell.project(outputLayer);

  // self-connection
  var self = memoryCell.project(memoryCell);

  // peepholes
  memoryCell.project(inputGate);
  memoryCell.project(forgetGate);
  memoryCell.project(outputGate);

  // gates
  inputGate.gate(input, Layer.gateType.INPUT);
  forgetGate.gate(self, Layer.gateType.ONE_TO_ONE);
  outputGate.gate(output, Layer.gateType.OUTPUT);

  // input to output direct connection
  inputLayer.project(outputLayer);

  // set the layers of the neural network
  this.set({
    input: inputLayer,
    hidden: [inputGate, forgetGate, memoryCell, outputGate],
    output: outputLayer
  });
}

// extend the prototype chain
LSTM.prototype = new Network();
LSTM.prototype.constructor = LSTM;

These examples are for explanatory purposes only; the Architect already includes Multilayer Perceptron and Multilayer LSTM network architectures.
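For comparison, here is a sketch of the same two networks built with the Architect instead of by hand. The function names and the training options are illustrative choices, not recommended settings. The `synaptic` argument is assumed to be the object returned by `require('synaptic')` in node, or the `synaptic` global in the browser.

```javascript
// Illustrative wrapper (not part of synaptic): builds the Architect's
// multilayer perceptron and trains it on an explicit XOR training set.
function trainBuiltInPerceptron(synaptic) {
  var Architect = synaptic.Architect;
  var Trainer = synaptic.Trainer;

  // 2 inputs, one hidden layer of 3 neurons, 1 output
  var perceptron = new Architect.Perceptron(2, 3, 1);
  var trainer = new Trainer(perceptron);

  var trainingSet = [
    { input: [0, 0], output: [0] },
    { input: [0, 1], output: [1] },
    { input: [1, 0], output: [1] },
    { input: [1, 1], output: [0] }
  ];

  // option values are assumptions chosen for this sketch
  var result = trainer.train(trainingSet, {
    rate: 0.1,
    iterations: 20000,
    error: 0.005,
    shuffle: true
  });

  return { perceptron: perceptron, result: result };
}

// Illustrative wrapper: 2 inputs, one layer of 6 memory blocks, 1 output
function createBuiltInLSTM(synaptic) {
  return new synaptic.Architect.LSTM(2, 6, 1);
}
```

In node this could be called as `trainBuiltInPerceptron(require('synaptic'))`. Passing the module in as an argument keeps the sketch usable in the browser as well, where no `require` call is needed.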

Contribute

Synaptic is an Open Source project that started in Buenos Aires, Argentina. Anybody in the world is welcome to contribute to the development of the project.

If you want to contribute, feel free to send PRs. Just make sure to run npm run test and npm run build before submitting them. This way you'll run all the test specs and build the web distribution files.

Support

If you like this project and you want to show your support, you can buy me a beer with magic internet money:

BTC: 16ePagGBbHfm2d6esjMXcUBTNgqpnLWNeK
ETH: 0xa423bfe9db2dc125dd3b56f215e09658491cc556
LTC: LeeemeZj6YL6pkTTtEGHFD6idDxHBF2HXa
XMR: 46WNbmwXpYxiBpkbHjAgjC65cyzAxtaaBQjcGpAZquhBKw2r8NtPQniEgMJcwFMCZzSBrEJtmPsTR54MoGBDbjTi2W1XmgM

<3